2.43 – 2.31 billion years ago

There are some interesting parallels between chemistry and linguistics. Remarkably, a recent article makes a strong case that there is an actual historical connection between the two sciences. Specifically, Mendeleev’s construction of the Periodic Table of Elements was probably influenced by Pāṇini’s classic generative grammar of Sanskrit, the Aṣṭādhyāyī. The Aṣṭādhyāyī was written somewhere around 500-350 BCE, and it has been said to be as central to India’s intellectual tradition as Euclid’s Elements is to the West’s. It probably came to Mendeleev’s attention through the work of his friend and colleague, the Indologist and philologist Otto von Böhtlingk, who translated it.

The physicist Eugene Wigner wrote about “the unreasonable effectiveness of mathematics in the natural sciences.” (Here is a cute recent example where repeated rebounding collisions produce successively closer approximations of pi.) Perhaps Mendeleev’s debt to Pāṇini via von Böhtlingk is an example of the unreasonable effectiveness of linguistics.

Here’s more on the parallels:

Chemistry plays a big role once Earth forms. Different mineral species appear, with different chemical compositions. Magnesium-heavy olivine sinks to the lower mantle of the Earth. Aluminum-rich feldspars float to the top.

Chemistry is an example of what William Abler calls “the particulate principle of self-diversifying systems”: what you get when a collection of discrete units (atoms) can combine according to definite rules to create larger units (molecules) whose properties aren’t just intermediate between those of the constituents. Paint is not an example. Red paint plus white paint is just pink paint; mix in a little more red or white to make it redder or whiter. But atoms and molecules are an example: two moles of hydrogen gas plus one mole of oxygen gas, compounded, make something very different, two moles of liquid water. Add in a little more hydrogen or oxygen and you just get leftover hydrogen or oxygen.
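To make the contrast concrete, here is a minimal Python sketch. It assumes the reaction 2 H2 + O2 → 2 H2O runs to completion, and the function names `make_water` and `mix_paint` are made up for illustration. Paint blends into an intermediate shade; atoms combine in fixed proportions, and any surplus is simply left over.

```python
def make_water(mol_h2, mol_o2):
    """React 2 H2 + O2 -> 2 H2O to completion.

    Returns (moles of water, leftover moles of H2, leftover moles of O2).
    Assumes ideal, complete reaction; illustration only.
    """
    # Each "batch" of the reaction uses up 2 mol H2 and 1 mol O2.
    batches = min(mol_h2 / 2, mol_o2)
    water = 2 * batches
    return water, mol_h2 - 2 * batches, mol_o2 - batches


def mix_paint(red_parts, white_parts):
    """Blending: the result is just an intermediate shade (fraction of red)."""
    return red_parts / (red_parts + white_parts)


# Extra hydrogen does not change the product, it is just left over.
print(make_water(2, 1))
print(make_water(3, 1))
# Extra red paint shifts the shade smoothly.
print(mix_paint(1, 1), mix_paint(3, 1))
```

Adding a third mole of hydrogen leaves the amount of water unchanged, whereas every extra part of red paint changes the color a little.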

A lot of important chemical principles are summed up in the periodic table.

On the far right are atoms that have their electron shells filled, and don’t feel like combining with anyone. Most of the way, but not all the way, to the right are atoms with almost all their shells filled, just looking for an extra electron or two. (Think oxygen, O, with slots for two extra electrons.) On the left are atoms with a few extra electrons they can share. (Think hydrogen, H, each atom with an extra electron it’s willing to share with, say, oxygen.) In the middle are atoms that could go either way: polymorphously perverse carbon, C, star of organic chemistry, with four slots to fill and four electrons to share, and metals, which like to pool their electrons in a big cloud and conduct electricity and heat easily. (Think of Earth’s core of molten iron, Fe, a big electric dynamo.)

Another example of “the particulate principle of self-diversifying systems” is human language. Consider speech sounds, for example. You’ve got small discrete units (phonemes, the sounds we write b, p, s, k, ch, sh, and so on) that can combine according to rules to give syllables. Some syllables are possible according to the rules of English, others are not. Star and spiky, thole and plast, are possible English words; tsar and psyche are not (at least if you pronounce all the consonants, the way Russians or Greeks do), nor are tlaps or bratz (if you actually try to pronounce the z). Thirty years ago app, blog, and twerk were not words in the English language, but they were possible words, according to English sound laws.
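The idea of a “possible word” can be sketched in a few lines of Python. This is a toy, not a real phonology of English. It treats spelling as if it were sound, it looks only at the consonants before the first vowel (the onset), and `ALLOWED_ONSETS` is a small hand-picked sample rather than a full inventory.

```python
VOWELS = set("aeiouy")

# Hand-picked, incomplete sample of onsets that English permits.
# Spelling stands in for sound, so "th" counts as a single unit here.
ALLOWED_ONSETS = {"", "b", "p", "s", "t", "th", "st", "sp", "pl", "bl", "br", "tw"}


def onset(word):
    """Return the run of consonant letters before the first vowel letter."""
    for i, ch in enumerate(word):
        if ch in VOWELS:
            return word[:i]
    return word


def has_possible_onset(word):
    """True if the word starts with an onset from the sample list."""
    return onset(word.lower()) in ALLOWED_ONSETS


for w in ["star", "spiky", "thole", "plast", "app", "blog", "twerk"]:
    print(w, has_possible_onset(w))
for w in ["tsar", "psyche", "tlaps"]:
    print(w, has_possible_onset(w))
```

Because the sketch checks only onsets, it would wrongly accept bratz, whose problem lies at the end of the syllable. A fuller rule set would need constraints on final clusters too.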

You can make a periodic table of consonants.

Across the top are the different places in the vocal tract where you block the flow of air. Along the left side are different ways of blocking the flow (stopping it completely, as in t; letting it leak out, as in s; and so on). The table can explain why, for example, we use in for intangible and indelicate, but switch to im for impossible and imbalance: m is made at the lips, in the same column as p and b, so the nasal shifts to match the place of the consonant that follows. (The table contains sounds we don’t use in English, and uses a special set of signs, the International Phonetic Alphabet, which assigns one symbol per sound.) This is why a book title like The Atoms of Language makes sense (a good book, by the way).

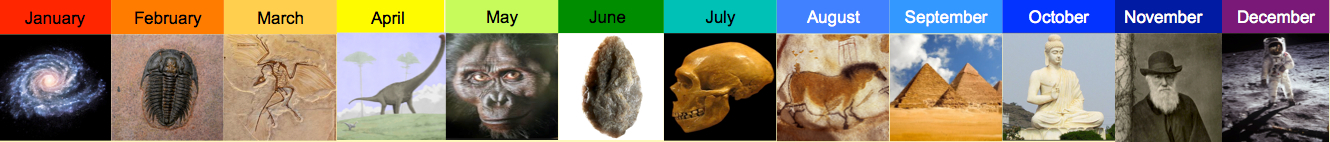
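The in/im alternation can be written as a one-line rule. The Python sketch below is a simplification: it uses the first letter of the stem as a stand-in for its first sound, the name `negate` is made up, and it ignores related cases such as illegal and irregular.

```python
# Sounds made by closing the lips (the labial column of the table).
LABIALS = {"p", "b", "m"}


def negate(stem):
    """Attach the prefix in-, switching to im- before a labial consonant."""
    prefix = "im" if stem[0] in LABIALS else "in"
    return prefix + stem


for stem in ["tangible", "delicate", "possible", "balance"]:
    print(negate(stem))
```

The rule never mentions individual words. It refers only to a column of the table, which is what makes the table explanatory rather than just a list.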

So sometimes the universe gets more complex because already existing stuff organizes itself into complex new patterns – clumps and swirls and stripes. But sometimes the universe gets more complex because brand new kinds of stuff appear, because a new particulate system comes online: elementary particles combine to make atoms, atoms combine to make molecules, or one set of systems (nucleotides to make genes, amino acids to make proteins) combines to make life, or another set of systems (phonemes to make words, words to make phrases and sentences) combines to make language.